As artificial intelligence (AI) software becomes more widely used, questions have arisen about how it may inadvertently amplify social biases. One area of concern is facial detection and recognition software. Biases in the data sets used to ‘train’ AI software may lead to racial biases in the end products. Because such products are sometimes used in law enforcement, these biases raise civil rights concerns.

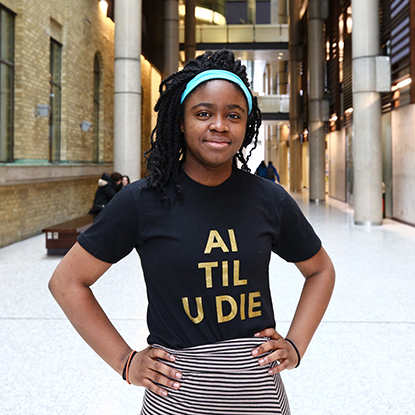

Year 4 EngSci student Deb Raji (1T8 PEY) and collaborators at the Massachusetts Institute of Technology (MIT) recently won “best student paper” at the Artificial Intelligence, Ethics, and Society (AIES) Conference in Honolulu, Hawaii, for identifying performance disparities in commonly used facial detection software across groups of different genders and skin tones. Using Amazon’s Rekognition software, they found that darker-skinned women were misidentified as men in nearly one-third of cases.

Raji hopes that this work will show companies how to rigorously audit their algorithms to uncover hidden biases. “Deb Raji’s work highlights the critical need to place engineering work within a social context,” says Professor Deepa Kundur, Chair of the Division of Engineering Science. “We’re very proud of Deb’s achievements and look forward to her future contributions to the field.”